
Technology Musings Episode 7

This episode's musings are about security-in-depth, meaning the use of multiple technologies to secure your IT, explained with a daft analogy for Google Cloud's VPC Service Controls.

VPC SC Perimeters

Just recently, we've been working with a couple of clients on implementing and reworking Google Cloud VPC Service Controls (VPC-SC). If you aren't aware of this service from Google, it is a technology that allows you to surround your resources accessible through Google APIs with a protective perimeter. That doesn't mean VMs or apps which are accessible over the Internet, but resources such as Dataflow pipelines or BigQuery datasets. You can think of it a little bit like an advanced firewall for your Google Cloud resources.

VPC-SC is a great product to add another layer to your security-in-depth strategy, but it can be quite complex. So I wanted to offer a short article, with the aid of a silly analogy and some terrible drawings, to hopefully give an easy-to-remember way of thinking about a complicated topic.

Let's start off by briefly describing some components:

- Perimeters. There are actually 2 types of perimeter: regular and bridge. For the purposes of this article we'll treat them separately, so "perimeter" here means the regular type. Perimeters are walls which you can put around your cloud resources to stop things getting to them or them getting out. A bit like a firewall. By default, when created, they don't stop anything.

- Bridges. A project can only be in one perimeter at a time, so if you need projects in different perimeters to communicate, you'll need to create a bridge between them.

- Ingress/egress (directional) policies. Bridges are very simple things and have no fine grained control over what goes back and forth. I/E policies can be applied to individual perimeters and define granular rules of what goes in and what comes out.

- Access Levels. An access level is a lot like a profile of some source location which can be added to a perimeter or an I/E rule. They can contain combinations of types of sources, like service account identities and IP addresses. They can also define device types as a source or even include other access levels so we can group them together.
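To make the four components above a bit more concrete, here is a minimal Python sketch of how they relate to each other. It is purely a mental model: the class and field names are my own invention for this article and are not the VPC-SC API. The helper at the end checks the "one regular perimeter per project" rule mentioned under Bridges.

```python
from dataclasses import dataclass, field


@dataclass
class AccessLevel:
    """A named profile of approved sources (IP ranges and identities)."""
    name: str
    ip_ranges: list[str] = field(default_factory=list)
    members: list[str] = field(default_factory=list)


@dataclass
class DirectionalRule:
    """An ingress or egress rule attached to one perimeter."""
    direction: str                      # "ingress" or "egress"
    service: str                        # e.g. "storage.googleapis.com"
    methods: list[str] = field(default_factory=list)
    access_levels: list[str] = field(default_factory=list)


@dataclass
class Perimeter:
    """A regular perimeter: a wall around a set of projects."""
    name: str
    projects: set[str] = field(default_factory=set)
    # Empty by default, so a freshly created perimeter blocks nothing.
    restricted_services: set[str] = field(default_factory=set)
    rules: list[DirectionalRule] = field(default_factory=list)


@dataclass
class Bridge:
    """A perimeter bridge: links projects that sit in different perimeters."""
    name: str
    projects: set[str] = field(default_factory=set)


def projects_in_multiple_perimeters(perimeters: list[Perimeter]) -> set[str]:
    """Return projects that appear in more than one regular perimeter."""
    seen: set[str] = set()
    duplicates: set[str] = set()
    for perimeter in perimeters:
        duplicates |= seen & perimeter.projects
        seen |= perimeter.projects
    return duplicates
```

An empty result from `projects_in_multiple_perimeters` means every project sits behind at most one wall, which is the situation the rest of the article assumes.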

Our descriptions out of the way, let's get into our silly analogy.

Build a wall

First of all, let's start off by imagining our Google Cloud projects as small villages that offer services to people inside and outside of the village. Let's say we have 2 villages. Village 1 has a compute workshop, which only they can use within the village, and a storage depot which they want to allow external access to. Then, village 2 has a BigQuery workshop and they want to allow select access to it externally.

So, the first thing we're going to do is put up two walls. One around each village...

There we go. Two walls around two villages. We could add a single wall around both villages, whereby all access to services would be available between villages, but two works well for the example.

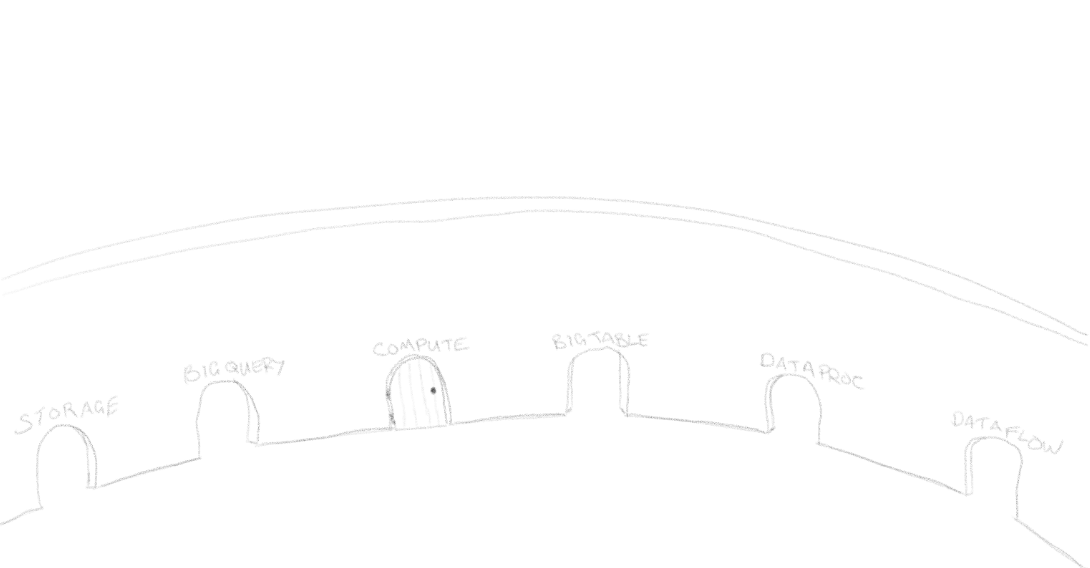

Now, by default, adding a perimeter doesn't restrict any access until you add services to its restricted services list. So our wall has lots of open doorways and paths to the village workshops. If you add a service to the list, it effectively closes the door to and from the workshop it represents so that the path in and out of the perimeter wall is blocked.

Above, only the Compute workshop has been restricted by closing the Compute doorway on our wall. For our example, we're going to close all of the doors to prevent malicious outsiders accessing anything inside the village, and bad actors inside sending things out (data loss prevention). In reality you may not want to restrict all services but this is a good place to start.
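If it helps to see the doors as logic, here is a toy Python sketch of the idea. It is a simplification I've made up for the analogy, not how Google evaluates requests: a door only matters when a request crosses the wall, and it is only closed when the service is on the restricted list. The function name and the service strings are illustrative assumptions.

```python
from typing import Optional


def door_is_closed(
    perimeter_projects: set[str],
    restricted_services: set[str],
    source_project: Optional[str],   # None stands for "somewhere on the Internet"
    target_project: str,
    service: str,
) -> bool:
    """Return True when the wall blocks this request in the toy model."""
    source_inside = source_project in perimeter_projects
    target_inside = target_project in perimeter_projects
    # Traffic that stays on one side of the wall never meets a door.
    crosses_wall = source_inside != target_inside
    return crosses_wall and service in restricted_services
```

With an empty restricted list the function always returns `False`, which matches the point above that a new perimeter blocks nothing until you start closing doors.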

"But what about Identity and Access Management? Doesn't that provide access control like this?" I don't hear you ask. Well, our standard IAM is like a set of keys to those workshops, each key opening up more access to the shop (user roles through to admin roles). Whereas adding a perimeter is like adding a wall around our village. If someone gets hold of your keys, they can get into your workshop without any issues but if there is also a big wall around the workshop, it's more difficult.

To summarise where we are now. We have:

- 2 villages (Projects)

- 1 wall (Perimeter) around each village, with all doors (Cloud Services) closed and preventing access in or out

Bridge a gap

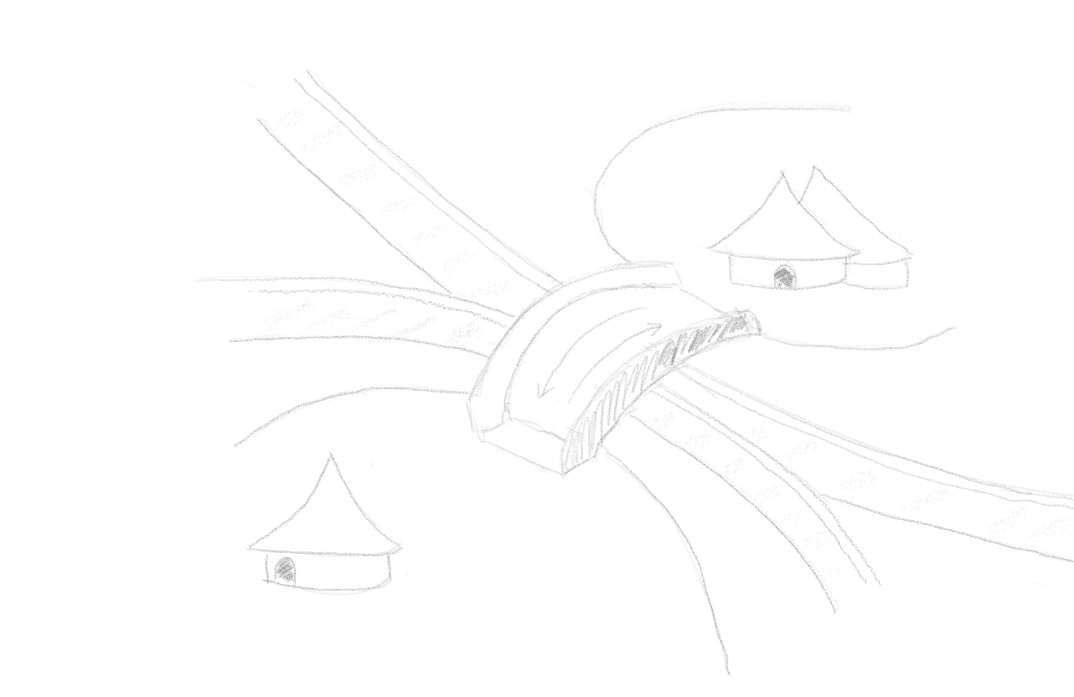

At this stage, even our two villages can't work with each other any longer; but just like the UK and EU they're great friends (ahem!), so we're going to drop a bridge over the top of the perimeter walls with one end in one village and one end in the other. As pointed out at the start, bridges are simple, so when we do this everyone in each village can cross over it to get to all the shops on the other side.

At this stage we haven't made any other external access changes. We've kind of just extended our internal access. There's still no external access for those objects in our village's storage depot or useful information via the BigQuery data workshop. For this we're going to make use of our fancy new(ish) ingress/egress policies.
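As a rough sketch of what the bridge changes, the toy Python function below treats two projects as able to talk if they share a wall or share a bridge. Again, this is my own simplified model with made-up names. IAM still has to grant access on top of this, and nothing here lets anyone in from outside the villages.

```python
def can_reach(
    source_project: str,
    target_project: str,
    perimeters: dict[str, set[str]],   # perimeter name -> projects inside it
    bridges: list[set[str]],           # each bridge is a set of linked projects
) -> bool:
    """Return True when the walls and bridges allow the two projects to talk."""
    same_perimeter = any(
        source_project in projects and target_project in projects
        for projects in perimeters.values()
    )
    bridged = any(
        source_project in bridge and target_project in bridge
        for bridge in bridges
    )
    return same_perimeter or bridged


walls = {"wall-1": {"village-1"}, "wall-2": {"village-2"}}
village_bridge = [{"village-1", "village-2"}]
```

Calling `can_reach("village-1", "village-2", walls, [])` gives `False`, while passing `village_bridge` as the last argument gives `True`. A third village that is not on the bridge stays cut off either way.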

Border control

We can think of I/E policies like a VIP list that a nightclub doorman might use to know who's allowed in (and out). By default we don't need our doorman because when we restrict a service, we close and lock the door, so no one comes in or goes out. As we previously stated, our villages do want some wider availability to some services that they offer, so we're going to need to create some rules to give to the doorman on specific doors to help them to know who they can let in and out.

Below is a description of a workflow that ultimately allows each village to sell their products.

- Village 1 watches out for important public notices (Pub/Sub)

- Village 1, with a special app (Compute Engine), processes the data which comes with those notices in a particular way which they know other people can use

- Village 1 packages that processed data and puts it into a storage depot (Cloud Storage)

- Village 1 pushes the processed data over to village 2's information gathering plant (BigQuery)

- Village 2 also adds data from another external partner to their information plant

- Village 2 allows access to analyse the combined data to an external customer

For steps 1 and 2 we need to create an egress rule for the doorman on the Pub/Sub door on the perimeter around village 1. Each VM on Google Cloud has an attached service account to identify the compute worker, so we can create a rule that tells the Pub/Sub doorman to allow only that compute worker access to read external topics.

Next, for step 3, our storage depot is happy for anyone to be able to take a copy of the objects stored there but we don't want people to be able to modify anything there. For this we create an ingress rule (on the same perimeter) which allows everyone access to collect things from the depot. This tells our doorman on the storage door to let anyone come in but they can only go down the path to the collections window of our storage depot.

For step 4, our compute worker can just use the bridge to access village 2, so no rules are needed for any doorman, just keys (IAM) to the workshop there.
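For the curious, here is roughly what the two village 1 rules could look like, written as Python dictionaries. The key names follow the shape of the ingress and egress rule files as I understand them, so please check them against the documentation before relying on them. The service account name is hypothetical, the Storage method names are illustrative examples of read-only methods, and the Pub/Sub selector is left as a wildcard because the exact subscribe method names should be taken from the supported method restrictions page. The `rule_permits` helper is just a toy matcher for the sketch.

```python
# Hypothetical service account attached to the compute worker in village 1.
COMPUTE_WORKER = "serviceAccount:compute-worker@village-1.iam.gserviceaccount.com"

# Steps 1 and 2: let only the compute worker out through the Pub/Sub door.
pubsub_egress_rule = {
    "egressFrom": {"identities": [COMPUTE_WORKER]},
    "egressTo": {
        "operations": [
            {
                "serviceName": "pubsub.googleapis.com",
                # Wildcard used as a placeholder; narrow this to the
                # subscribe methods in a real rule.
                "methodSelectors": [{"method": "*"}],
            }
        ],
        "resources": ["*"],
    },
}

# Step 3: let anyone in through the Storage door, but only to read.
storage_ingress_rule = {
    "ingressFrom": {
        "identityType": "ANY_IDENTITY",
        "sources": [{"accessLevel": "*"}],
    },
    "ingressTo": {
        "operations": [
            {
                "serviceName": "storage.googleapis.com",
                "methodSelectors": [
                    {"method": "google.storage.objects.get"},
                    {"method": "google.storage.objects.list"},
                ],
            }
        ],
        "resources": ["*"],
    },
}


def rule_permits(rule: dict, service: str, method: str) -> bool:
    """Toy check: does this rule list the given service and method?"""
    target = rule.get("ingressTo") or rule.get("egressTo")
    for operation in target["operations"]:
        if operation["serviceName"] != service:
            continue
        for selector in operation["methodSelectors"]:
            if selector.get("method") in ("*", method):
                return True
    return False
```

In this sketch the storage rule permits `google.storage.objects.get` but not a write method such as `google.storage.objects.create`, which is the "collections window only" behaviour from the analogy.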

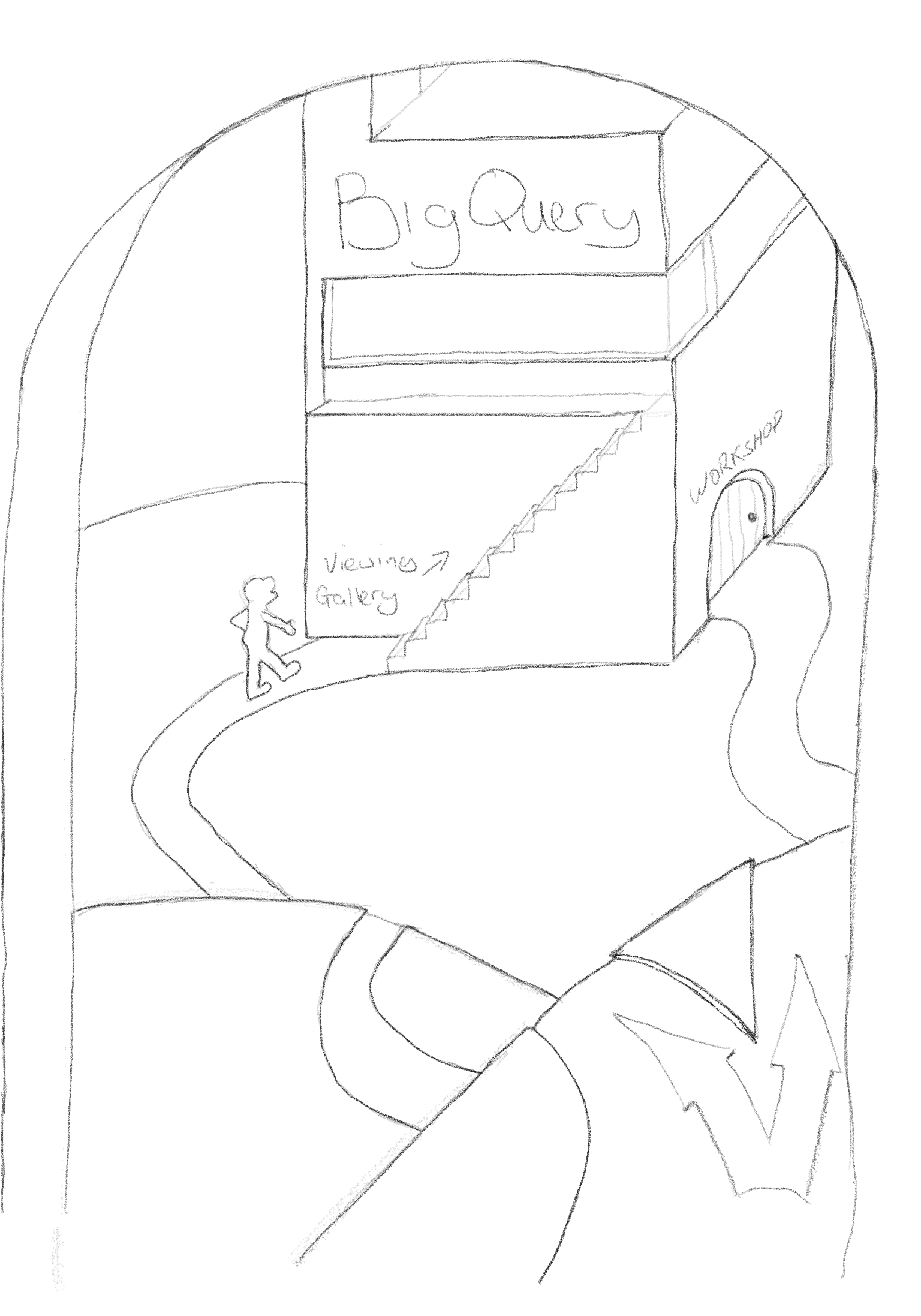

For village 2, they don't want just anyone to be able to run BigQueries on the data but do want a select few, so our BigQuery doorman on the perimeter around village 2 also needs a couple of rules to know who can access the BigQuery workshop.

Let's say for steps 5 and 6, the external locations happen to be 2 new villages: village 3, who just wants to be able to view the data in a variety of ways, and village 4, who will similarly view the data but will also help to maintain and add data.

For each of these two villages we're going to create a profile (access level) that defines specific service accounts AND IP addresses which belong to them. These profiles define an approved source for access through the wall and we then reference those profiles on our ingress rules to add an extra level of specificity to the rule. It's also useful to define profiles like this because if either of those villages change any of their personal details, we can simply update their profile and have the change applied everywhere it's used.

Once we have our village profiles (access levels) in place, a doorman can look up their details anytime he comes across a rule which references one. So let's create them.
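Here is a small Python sketch of what a village profile does, using only the standard library. It is a toy model with invented names, not the Access Context Manager API: a request matches the profile only if its source IP falls inside one of the listed ranges AND its identity is one of the listed members. The IP range and service account below are placeholder values.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessLevel:
    """Toy profile: approved IP ranges plus approved identities."""
    name: str
    ip_ranges: tuple[str, ...]
    members: tuple[str, ...]

    def matches(self, source_ip: str, identity: str) -> bool:
        """Both conditions must hold: known network AND known identity."""
        address = ipaddress.ip_address(source_ip)
        in_known_range = any(
            address in ipaddress.ip_network(ip_range)
            for ip_range in self.ip_ranges
        )
        return in_known_range and identity in self.members


# Placeholder values for village 3 (documentation IP range, made-up account).
VILLAGE_3_READER = "serviceAccount:reader@village-3.iam.gserviceaccount.com"
village_3 = AccessLevel(
    name="village-3",
    ip_ranges=("203.0.113.0/24",),
    members=(VILLAGE_3_READER,),
)
```

Because every rule only holds the profile's name, changing village 3's IP range means editing this one object, which is the "update the profile once" benefit described above.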

The first ingress rule we will create is for village 3, which just wants to read and query data. So we create a rule for the BigQuery door which only allows members of that rule to access the viewing gallery of the BigQuery workshop. This allows them to look in on all the data from all angles so they can extract any information they're looking for, but not touch it. We then specify village 3's profile as the only member on that rule.

The second ingress rule we create is for village 4 to also use the BigQuery door. This rule allows members to go down the path to the main workers' (admin) door of the BigQuery workshop. On that rule, this means we similarly allow read and query but further include access to add and update data as well. This rule has village 4's profile as the only member.
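The difference between the two BigQuery rules can be sketched in a few lines of Python. The action names below are simplified labels borrowed from this article (read, get, list, insert, update) rather than real BigQuery method or permission names, and the rule structure is a toy of my own, so treat it as an illustration of the idea only.

```python
# Simplified action labels, not real BigQuery method names.
READ_ACTIONS = frozenset({"read", "get", "list"})
WRITE_ACTIONS = frozenset({"insert", "update"})

# One rule per village profile on the BigQuery door of village 2's wall.
bigquery_ingress_rules = [
    {"access_level": "village-3", "actions": READ_ACTIONS},
    {"access_level": "village-4", "actions": READ_ACTIONS | WRITE_ACTIONS},
]


def doorman_allows(access_level: str, action: str, rules=bigquery_ingress_rules) -> bool:
    """Return True if any rule lets this profile perform this action."""
    return any(
        rule["access_level"] == access_level and action in rule["actions"]
        for rule in rules
    )
```

In this model village 3 can `read` but not `insert`, village 4 can do both, and a profile with no rule at all (say a hypothetical village 5) is turned away at the door.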

In summary, we've now added 4 I/E rules. 1 egress for Pub/Sub, 1 ingress for Storage, 2 ingress for BigQuery.

Conclusion

That about sums it all up. Our villages now have walls around them protecting their valuable assets. Both walls have all doors closed and we added a bridge for anyone in either village to be able to get over the walls into the next village. We then stuck 3 doormen onto 3 doors: we gave one of them a rule to allow out a special compute worker from village 1, gave one a rule to allow anyone in but only let them go to the storage depot's collections window, and gave one rules to only allow in specific actors from villages 3 and 4 to access the BigQuery workshop.

Technically we have the following setup:

- 2 Google Cloud projects. One with Storage, Compute and Pub/Sub services enabled; one with BigQuery service enabled

- 2 VPC-SC perimeters. One around each project. Both perimeters have all VPC-SC supported services restricted within

- 2 Access levels

  - village-3: defining a set of IP addresses and service accounts which village-3 external source can use to access data

  - village-4: defining a set of IP addresses and service accounts which village-4 external source can use to access data

- 1 egress rule defining the following:

  - service: Pub/Sub

  - methods: Pub/Sub subscribe methods

  - from: compute service account from project 1

- 3 ingress rules:

  - rule 1:

    - service: Storage

    - methods: read-only (list, get, etc) Storage methods

    - from: the Internet

  - rule 2:

    - service: BigQuery

    - methods: read-only (read, get, list, etc) BigQuery methods

    - from: village-3 access level

  - rule 3:

    - service: BigQuery

    - methods: read-only (read, get, list, etc) and write (insert, update, etc) BigQuery methods

    - from: village-4 access level


I had quite a bit of fun writing this article. Hope you enjoyed reading it.

Further reading

The product overview can be found at https://cloud.google.com/vpc-service-controls.

The links below are pages I wanted to call out specifically from the documentation because they will be useful on your journey.

For a more technical example of secure data exchange like above: https://cloud.google.com/vpc-service-controls/docs/secure-data-exchange

To see which products from Google are supported and what limitations they may have: https://cloud.google.com/vpc-service-controls/docs/supported-products

For the supported services, to see which methods can be added to ingress and egress rules: https://cloud.google.com/vpc-service-controls/docs/supported-method-restrictions

When you're seeing access issues for a project inside a perimeter, have a look at the troubleshooting guide: https://cloud.google.com/vpc-service-controls/docs/troubleshooting

VPC Accessible Services are important for controlling access to services from virtual machines on your VPCs. Network endpoints, like VMs, are not protected by VPC-SC in the same way as resources accessed through Google APIs, and the topic is beyond the scope of this article. So if you will be using APIs from virtual machines in combination with VPC-SC, you should look into the details of configuring Accessible Services, along with using Private Google Access and the Restricted VIP endpoint: https://cloud.google.com/vpc-service-controls/docs/vpc-accessible-services

If you would like to discuss any of these topics in more detail, please feel free to get in touch.